Tags: RUN mmcls -- apt server mmdet TorchServe model docker

Official tutorial: Model deployment to TorchServe (MMClassification 0.23.2 documentation)

mmcls

1. Convert an MMClassification model to TorchServe format

python tools/deployment/mmcls2torchserve.py ${CONFIG_FILE} ${CHECKPOINT_FILE} \
    --output-folder ${MODEL_STORE} \
    --model-name ${MODEL_NAME}

${MODEL_STORE} must be the absolute path of a folder.

Example:

python tools/deployment/mmcls2torchserve.py configs/resnet/resnet50_8xb256-rsb-a1-600e_in1k.py checkpoints/resnet50_8xb256-rsb-a1-600e_in1k.pth --output-folder ./checkpoints/ --model-name resnet50_8xb256-rsb-a1-600e_in1k
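Because ${MODEL_STORE} is supposed to be absolute while the example passes ./checkpoints/, one option is to resolve the path before calling the converter. The Python sketch below only assembles the same command line as above; build_convert_cmd is a hypothetical helper, not part of MMClassification.

```python
import os
import shlex


def build_convert_cmd(config, checkpoint, model_store, model_name,
                      tool="tools/deployment/mmcls2torchserve.py"):
    """Return the converter argv with --output-folder resolved to an absolute path."""
    return [
        "python", tool, config, checkpoint,
        "--output-folder", os.path.abspath(model_store),
        "--model-name", model_name,
    ]


if __name__ == "__main__":
    name = "resnet50_8xb256-rsb-a1-600e_in1k"
    cmd = build_convert_cmd(f"configs/resnet/{name}.py",
                            f"checkpoints/{name}.pth", "./checkpoints/", name)
    # Print a copy-pasteable command; run it from the repository root with
    # subprocess.run(cmd, check=True) to perform the conversion.
    print(shlex.join(cmd))
```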

2. Build the mmcls-serve Docker image

cd mmclassification

docker build -t mmcls-serve docker/serve/

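Before building, replace docker/serve/Dockerfile in the mmclassification repository with the content below. The main change is that the following lines are added before apt-get update: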

RUN sed -i s@/archive.ubuntu.com/@/mirrors.aliyun.com/@g /etc/apt/sources.list

RUN apt-get clean

RUN rm /etc/apt/sources.list.d/cuda.list

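The first line switches apt to the Aliyun mirror in China. The other two clear the apt cache and remove the NVIDIA CUDA apt source, which works around the CUDA repository signing-key change (see the NVIDIA Technical Blog post "Updating the CUDA Linux GPG Repository Key").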
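To see exactly what the sed line changes, its substitution can be mimicked in Python. use_aliyun_mirror is a hypothetical helper for illustration only; it applies the same pattern as the sed expression, so entries that point at other hosts (for example security.ubuntu.com) are left untouched.

```python
import re

# Same pattern as the Dockerfile's sed expression
#   s@/archive.ubuntu.com/@/mirrors.aliyun.com/@g
# As in sed, the unescaped "." matches any character.
_ARCHIVE = re.compile(r"/archive.ubuntu.com/")


def use_aliyun_mirror(sources_list: str) -> str:
    """Rewrite Ubuntu archive URLs in a sources.list body to the Aliyun mirror."""
    return _ARCHIVE.sub("/mirrors.aliyun.com/", sources_list)
```

For example, "deb http://archive.ubuntu.com/ubuntu/ bionic main" becomes "deb http://mirrors.aliyun.com/ubuntu/ bionic main".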


⚠️ Without these lines, the build fails with:

W: GPG error: https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64/ InRelease: The following signatures couldn't be verified because the public key is not available: NO_PUBKEY A4B469963BF863CC

E: The repository 'https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64 InRelease' is no longer signed.

The new Dockerfile:

ARG PYTORCH="1.8.1"
ARG CUDA="10.2"
ARG CUDNN="7"
FROM pytorch/pytorch:${PYTORCH}-cuda${CUDA}-cudnn${CUDNN}-devel

ARG MMCV="1.4.2"
ARG MMCLS="0.23.2"

ENV PYTHONUNBUFFERED TRUE

# Switch apt to the Aliyun mirror, clear the apt cache and drop the NVIDIA CUDA apt source (GPG key error)
RUN sed -i s@/archive.ubuntu.com/@/mirrors.aliyun.com/@g /etc/apt/sources.list
RUN apt-get clean
RUN rm /etc/apt/sources.list.d/cuda.list

RUN apt-get update && \
    DEBIAN_FRONTEND=noninteractive apt-get install --no-install-recommends -y \
    ca-certificates \
    g++ \
    openjdk-11-jre-headless \
    # MMDet Requirements
    ffmpeg libsm6 libxext6 git ninja-build libglib2.0-0 libxrender-dev \
    && rm -rf /var/lib/apt/lists/*

ENV PATH="/opt/conda/bin:$PATH"
# ENV instead of "RUN export" so the variable persists into later build steps
ENV FORCE_CUDA=1

# TORCHSERVE
RUN pip install torchserve torch-model-archiver

# MMLAB
ARG PYTORCH
ARG CUDA
RUN ["/bin/bash", "-c", "pip install mmcv-full==${MMCV} -f https://download.openmmlab.com/mmcv/dist/cu${CUDA//./}/torch${PYTORCH}/index.html"]
RUN pip install mmcls==${MMCLS}

RUN useradd -m model-server \
    && mkdir -p /home/model-server/tmp

COPY entrypoint.sh /usr/local/bin/entrypoint.sh

RUN chmod +x /usr/local/bin/entrypoint.sh \
    && chown -R model-server /home/model-server

COPY config.properties /home/model-server/config.properties
RUN mkdir /home/model-server/model-store && chown -R model-server /home/model-server/model-store

EXPOSE 8080 8081 8082

USER model-server
WORKDIR /home/model-server
ENV TEMP=/home/model-server/tmp
ENTRYPOINT ["/usr/local/bin/entrypoint.sh"]
CMD ["serve"]
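The mmcv-full step uses the JSON form with /bin/bash -c because ${CUDA//./} is a bash pattern substitution (it deletes the dots, so 10.2 becomes 102) that the default /bin/sh of Ubuntu images (dash) does not support. The sketch below reproduces the resulting wheel index URL in Python; mmcv_find_links and pip_install_mmcv_cmd are hypothetical helper names, and the URL layout is copied from the Dockerfile above.

```python
def mmcv_find_links(cuda: str, torch: str) -> str:
    """Build the mmcv-full wheel index URL used in the Dockerfile.

    cuda.replace(".", "") does what bash's ${CUDA//./} does: "10.2" -> "102".
    """
    return ("https://download.openmmlab.com/mmcv/dist/"
            f"cu{cuda.replace('.', '')}/torch{torch}/index.html")


def pip_install_mmcv_cmd(mmcv: str, cuda: str, torch: str) -> list:
    """Argument list equivalent to the Dockerfile's mmcv-full RUN step."""
    return ["pip", "install", f"mmcv-full=={mmcv}",
            "-f", mmcv_find_links(cuda, torch)]


if __name__ == "__main__":
    # Values taken from the mmcls Dockerfile's ARG defaults.
    print(" ".join(pip_install_mmcv_cmd("1.4.2", "10.2", "1.8.1")))
```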

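3. Run the mmcls-serve image

For details, see the official documentation, Running TorchServe with Docker.

To let the container use GPUs, install nvidia-docker; you can then pass the --gpus flag to run on the GPU.

Example: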

docker run --rm \
    --cpus 8 \
    --gpus device=0 \
    -p8080:8080 -p8081:8081 -p8082:8082 \
    --mount type=bind,source=`realpath ./checkpoints`,target=/home/model-server/model-store \
    mmcls-serve:latest
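If you start the container from a script, the same docker run invocation can be assembled as an argument list. This is a sketch: build_docker_run_cmd is a hypothetical helper, and os.path.realpath plays the role of `realpath ./checkpoints`.

```python
import os


def build_docker_run_cmd(image, model_store, gpus="device=0", cpus=8,
                         ports=(8080, 8081, 8082)):
    """Assemble the docker run argv that starts the TorchServe container.

    gpus=None omits --gpus (CPU-only run). The default ports are the
    inference (8080), management (8081) and metrics (8082) APIs.
    """
    cmd = ["docker", "run", "--rm", "--cpus", str(cpus)]
    if gpus:
        cmd += ["--gpus", gpus]
    for port in ports:
        # Publish each container port on the same host port.
        cmd += ["-p", f"{port}:{port}"]
    cmd += [
        "--mount",
        f"type=bind,source={os.path.realpath(model_store)},"
        "target=/home/model-server/model-store",
        image,
    ]
    return cmd


if __name__ == "__main__":
    # Pass the list to subprocess.run(cmd, check=True) to actually start the server.
    print(" ".join(build_docker_run_cmd("mmcls-serve:latest", "./checkpoints")))
```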

The command I actually ran:

docker run --rm --gpus 2 -p8080:8080 -p8081:8081 -p8082:8082 --mount type=bind,source=/home/xbsj/gaoying/mmdetection/checkpoints,target=/home/model-server/model-store mmcls-serve

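Notes

realpath ./checkpoints expands to the absolute path of ./checkpoints; you can replace it with the absolute path of the directory where you keep your TorchServe models.

See the TorchServe documentation for details on the inference (8080), management (8081) and metrics (8082) APIs.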
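Once the container is up, each of the three ports can be probed. The sketch below assumes TorchServe's standard endpoints: GET /ping on the inference port, GET /models on the management port and GET /metrics on the metrics port. torchserve_endpoints, ping and list_models are hypothetical helper names.

```python
import json
import urllib.request


def torchserve_endpoints(host="127.0.0.1"):
    """URLs of the three TorchServe APIs published by the container."""
    return {
        "ping": f"http://{host}:8080/ping",        # inference API health check
        "models": f"http://{host}:8081/models",    # management API: registered models
        "metrics": f"http://{host}:8082/metrics",  # metrics API (Prometheus text format)
    }


def ping(host="127.0.0.1", timeout=5.0):
    """Return the parsed /ping reply, e.g. {"status": "Healthy"} when the server is up."""
    with urllib.request.urlopen(torchserve_endpoints(host)["ping"], timeout=timeout) as resp:
        return json.load(resp)


def list_models(host="127.0.0.1", timeout=5.0):
    """Return the management API's model listing as a dict."""
    with urllib.request.urlopen(torchserve_endpoints(host)["models"], timeout=timeout) as resp:
        return json.load(resp)
```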

4. Test the deployment

curl http://127.0.0.1:8080/predictions/${MODEL_NAME} -T demo/demo.JPEG

Example:

curl http://127.0.0.1:8080/predictions/resnet50_8xb256-rsb-a1-600e_in1k -T demo/demo.JPEG
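The same request can be sent from Python with only the standard library. This sketch assumes the server from step 3 is listening on 127.0.0.1:8080. curl -T uploads with PUT, whereas the sketch sends the image as a POST body, which the TorchServe inference API also accepts; it sets Content-Type to application/octet-stream explicitly because urllib would otherwise label a POST body as form data. build_predict_request and predict are hypothetical names.

```python
import json
import urllib.request


def build_predict_request(image_bytes, model_name, host="127.0.0.1", port=8080):
    """POST request carrying the raw image bytes to /predictions/<model_name>."""
    return urllib.request.Request(
        f"http://{host}:{port}/predictions/{model_name}",
        data=image_bytes,
        headers={"Content-Type": "application/octet-stream"},
        method="POST",
    )


def predict(image_path, model_name, host="127.0.0.1", port=8080, timeout=30.0):
    """Send one image file and return the decoded JSON prediction."""
    with open(image_path, "rb") as f:
        req = build_predict_request(f.read(), model_name, host, port)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())
```

For example, predict("demo/demo.JPEG", "resnet50_8xb256-rsb-a1-600e_in1k") should return a dict with the same keys as the curl response.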

You should get a response similar to:

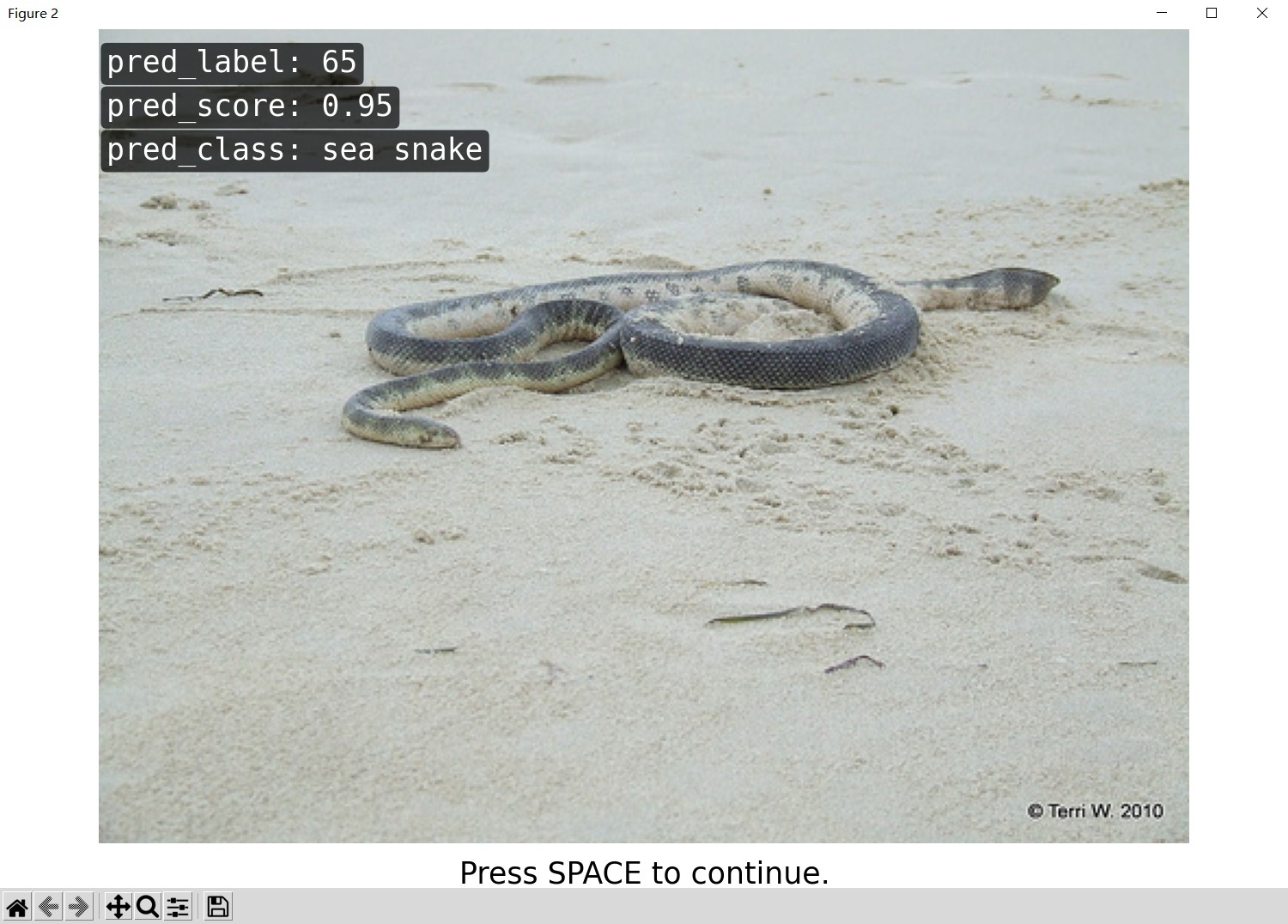

{
  "pred_label": 65,
  "pred_score": 0.9548004269599915,
  "pred_class": "sea snake"
}
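The classification response is a flat JSON object, so a client only needs to read three keys. The helper below is a hypothetical example built from the key names in the sample response.

```python
import json


def summarize_classification(response_text: str) -> str:
    """Turn the TorchServe JSON reply into a short human-readable line."""
    result = json.loads(response_text)
    return (f'{result["pred_class"]} '
            f'(label {result["pred_label"]}, score {result["pred_score"]:.3f})')
```

With the response above it returns "sea snake (label 65, score 0.955)".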

You can also use test_torchserver.py to compare the TorchServe and PyTorch results and visualize them.

python tools/deployment/test_torchserver.py ${IMAGE_FILE} ${CONFIG_FILE} ${CHECKPOINT_FILE} ${MODEL_NAME} \
    [--inference-addr ${INFERENCE_ADDR}] [--device ${DEVICE}]

Example:

python tools/deployment/test_torchserver.py demo/demo.JPEG configs/resnet/resnet50_8xb256-rsb-a1-600e_in1k.py checkpoints/resnet50_8xb256-rsb-a1-600e_in1k.pth resnet50_8xb256-rsb-a1-600e_in1k
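At its core such a check compares two predictions. The function below is a hypothetical illustration of that comparison (same pred_label, pred_score within a tolerance); it is not the code inside test_torchserver.py, and the 1e-3 tolerance is an arbitrary assumption.

```python
import math


def results_agree(serve_result, pytorch_result, score_tol=1e-3):
    """Hypothetical check: same predicted label, scores equal within score_tol."""
    return (serve_result["pred_label"] == pytorch_result["pred_label"]
            and math.isclose(serve_result["pred_score"],
                             pytorch_result["pred_score"], abs_tol=score_tol))
```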

Visualization result:

[Figure: test_torchserver.py visualization]

To stop the running service:

docker container ps                      # list the running containers
docker container stop ${CONTAINER_ID}    # stop the container with that ID
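Instead of copying the container ID by hand, running containers can be looked up by image. This sketch assumes the docker CLI is on PATH and relies on docker's `--filter ancestor=` option; ps_cmd and stop_containers are hypothetical helpers. Since the container was started with --rm, it is also removed once it stops.

```python
import subprocess


def ps_cmd(image):
    """docker ps invocation that prints only the IDs of containers started from `image`."""
    return ["docker", "container", "ps", "-q", "--filter", f"ancestor={image}"]


def stop_containers(image):
    """Stop every running container started from `image`; return the stopped IDs."""
    out = subprocess.run(ps_cmd(image), check=True, capture_output=True, text=True)
    ids = out.stdout.split()
    if ids:
        subprocess.run(["docker", "container", "stop", *ids], check=True)
    return ids
```

Usage: stop_containers("mmcls-serve"), or stop_containers("mmdet-serve") for the detection image.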

mmdet

1. Convert an MMDetection model to TorchServe format

python tools/deployment/mmdet2torchserve.py ${CONFIG_FILE} ${CHECKPOINT_FILE} \
    --output-folder ${MODEL_STORE} \
    --model-name ${MODEL_NAME}

${MODEL_STORE} must be the absolute path of a folder.

Example:

python tools/deployment/mmdet2torchserve.py configs/yolox/yolox_s_8x8_300e_coco.py checkpoints/yolox_s_8x8_300e_coco.pth --output-folder ./checkpoints --model-name yolox_s_8x8_300e_coco
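Before starting the server it is worth checking that the model archive actually landed in the model store. The sketch assumes the converter writes <model-name>.mar into the --output-folder directory; mar_path and check_archive are hypothetical helpers.

```python
import os


def mar_path(model_store, model_name):
    """Expected archive location (assumption: <model-name>.mar in the output folder)."""
    return os.path.join(os.path.abspath(model_store), f"{model_name}.mar")


def check_archive(model_store, model_name):
    """Return the archive path, or raise FileNotFoundError if it is missing."""
    path = mar_path(model_store, model_name)
    if not os.path.isfile(path):
        raise FileNotFoundError(f"TorchServe archive not found: {path}")
    return path
```

Usage: check_archive("./checkpoints", "yolox_s_8x8_300e_coco").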

2. Build the mmdet-serve Docker image

cd mmdetection

docker build -t mmdet-serve docker/serve/

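Before building, replace docker/serve/Dockerfile in the mmdetection repository with the content below. The main change is that the following lines are added before apt-get update:

The first line switches apt to the Aliyun mirror in China. The other two clear the apt cache and remove the NVIDIA CUDA apt source, which works around the CUDA repository signing-key change (see the NVIDIA Technical Blog post "Updating the CUDA Linux GPG Repository Key").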

RUN sed -i s@/archive.ubuntu.com/@/mirrors.aliyun.com/@g /etc/apt/sources.list

RUN apt-get clean

RUN rm /etc/apt/sources.list.d/cuda.list

⚠️ Without these lines, the build fails with:

W: GPG error: https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64/ InRelease: The following signatures couldn't be verified because the public key is not available: NO_PUBKEY A4B469963BF863CC

E: The repository 'https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64 InRelease' is no longer signed.

The new Dockerfile:

ARG PYTORCH="1.6.0"
ARG CUDA="10.1"
ARG CUDNN="7"
FROM pytorch/pytorch:${PYTORCH}-cuda${CUDA}-cudnn${CUDNN}-devel

ARG MMCV="1.3.17"
ARG MMDET="2.23.0"

ENV PYTHONUNBUFFERED TRUE

# Switch apt to the Aliyun mirror, clear the apt cache and drop the NVIDIA CUDA apt source (GPG key error)
RUN sed -i s@/archive.ubuntu.com/@/mirrors.aliyun.com/@g /etc/apt/sources.list
RUN apt-get clean
RUN rm /etc/apt/sources.list.d/cuda.list

RUN apt-get update && \
    DEBIAN_FRONTEND=noninteractive apt-get install --no-install-recommends -y \
    ca-certificates \
    g++ \
    openjdk-11-jre-headless \
    # MMDet Requirements
    ffmpeg libsm6 libxext6 git ninja-build libglib2.0-0 libxrender-dev \
    && rm -rf /var/lib/apt/lists/*

ENV PATH="/opt/conda/bin:$PATH"
# ENV instead of "RUN export" so the variable persists into later build steps
ENV FORCE_CUDA=1

# TORCHSERVE (installed from the Tsinghua PyPI mirror)
RUN pip install torchserve torch-model-archiver nvgpu -i https://pypi.tuna.tsinghua.edu.cn/simple/

# MMLAB
ARG PYTORCH
ARG CUDA
RUN ["/bin/bash", "-c", "pip install mmcv-full==${MMCV} -f https://download.openmmlab.com/mmcv/dist/cu${CUDA//./}/torch${PYTORCH}/index.html -i https://pypi.tuna.tsinghua.edu.cn/simple/"]
RUN pip install mmdet==${MMDET} -i https://pypi.tuna.tsinghua.edu.cn/simple/

RUN useradd -m model-server \
    && mkdir -p /home/model-server/tmp

COPY entrypoint.sh /usr/local/bin/entrypoint.sh

RUN chmod +x /usr/local/bin/entrypoint.sh \
    && chown -R model-server /home/model-server

COPY config.properties /home/model-server/config.properties
RUN mkdir /home/model-server/model-store && chown -R model-server /home/model-server/model-store

EXPOSE 8080 8081 8082

## Clean up installation caches
#RUN apt-get clean \
#    && conda clean -y --all \
#    && rm -rf /tmp/* /var/tmp/* \
#    && rm -rf ~/.cache/pip/* \
#    && rm -rf /var/lib/apt/lists/*

USER model-server
WORKDIR /home/model-server
ENV TEMP=/home/model-server/tmp
ENTRYPOINT ["/usr/local/bin/entrypoint.sh"]
CMD ["serve"]

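3. Run the mmdet-serve image

For details, see the official documentation, Running TorchServe with Docker.

To let the container use GPUs, install nvidia-docker; you can then pass the --gpus flag to run on the GPU.

Example: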

docker run --rm \
    --cpus 8 \
    --gpus device=0 \
    -p8080:8080 -p8081:8081 -p8082:8082 \
    --mount type=bind,source=`realpath ./checkpoints`,target=/home/model-server/model-store \
    mmdet-serve:latest

The command I actually ran:

docker run --rm --gpus 2 -p8080:8080 -p8081:8081 -p8082:8082 --mount type=bind,source=/home/xbsj/gaoying/mmdetection/checkpoints,target=/home/model-server/model-store mmdet-serve

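Notes

realpath ./checkpoints expands to the absolute path of ./checkpoints; you can replace it with the absolute path of the directory where you keep your TorchServe models.

See the TorchServe documentation for details on the inference (8080), management (8081) and metrics (8082) APIs.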

4. Test the deployment

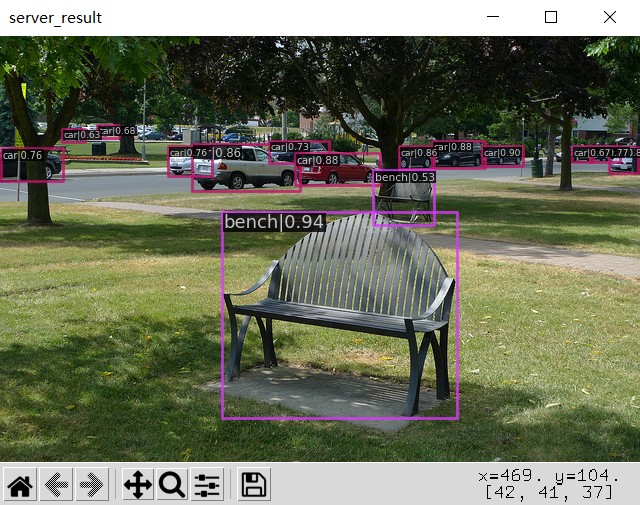

curl http://127.0.0.1:8080/predictions/yolox_s_8x8_300e_coco -T mmdetection/demo/demo.jpg

You should get a response similar to:

[
  {
    "class_name": "car",
    "bbox": [481.5609130859375, 110.4412612915039, 522.7328491210938, 130.5723876953125],
    "score": 0.8954943418502808
  },
  {
    "class_name": "car",
    "bbox": [431.3537902832031, 105.25204467773438, 484.0513610839844, 132.73513793945312],
    "score": 0.8776198029518127
  },
  {
    "class_name": "car",
    "bbox": [294.16278076171875, 117.66851806640625, 379.8677978515625, 149.80923461914062],
    "score": 0.8764416575431824
  },
  {
    "class_name": "car",
    "bbox": [191.56170654296875, 108.98323059082031, 299.0423278808594, 155.1902313232422],
    "score": 0.8606226444244385
  },
  {
    "class_name": "car",
    "bbox": [398.29461669921875, 110.82112884521484, 433.4544677734375, 133.10105895996094],
    "score": 0.8603343963623047
  },
  {
    "class_name": "car",
    "bbox": [608.4430541992188, 111.58413696289062, 637.6807250976562, 137.55311584472656],
    "score": 0.8566091060638428
  },
  {
    "class_name": "car",
    "bbox": [589.808349609375, 110.58977508544922, 619.0382080078125, 126.56522369384766],
    "score": 0.7685313820838928
  },
  {
    "class_name": "car",
    "bbox": [167.66847229003906, 110.8987045288086, 211.2526092529297, 140.1393585205078],
    "score": 0.764432430267334
  },
  {
    "class_name": "car",
    "bbox": [0.3290290832519531, 112.89485931396484, 62.417659759521484, 145.11058044433594],
    "score": 0.7574507594108582
  },
  {
    "class_name": "car",
    "bbox": [268.7782287597656, 105.21003723144531, 328.5860290527344, 127.79859924316406],
    "score": 0.7260770201683044
  },
  {
    "class_name": "car",
    "bbox": [96.76626586914062, 89.0433349609375, 118.81390380859375, 102.16648864746094],
    "score": 0.6803644299507141
  },
  {
    "class_name": "car",
    "bbox": [571.3563232421875, 110.22184753417969, 592.4779052734375, 126.81962585449219],
    "score": 0.6668680906295776
  },
  {
    "class_name": "car",
    "bbox": [61.34038162231445, 93.14757537841797, 84.83381652832031, 106.10137176513672],
    "score": 0.6323376893997192
  },
  {
    "class_name": "bench",
    "bbox": [221.9741668701172, 176.775146484375, 456.5819091796875, 382.6751708984375],
    "score": 0.9417163729667664
  },
  {
    "class_name": "bench",
    "bbox": [372.16351318359375, 134.79196166992188, 433.37713623046875, 189.78695678710938],
    "score": 0.5323100686073303
  }
]
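A detection response is a list of objects with class_name, bbox and score, so post-processing is mostly filtering by score. The sketch below is hypothetical: it assumes each bbox is [x1, y1, x2, y2] in pixels, and the 0.5 default threshold is arbitrary.

```python
import json
from collections import Counter


def count_detections(response_text, score_thr=0.5):
    """Count detections per class whose score is at least score_thr."""
    detections = json.loads(response_text)
    return Counter(d["class_name"] for d in detections if d["score"] >= score_thr)


def box_size(det):
    """Width and height of one detection, assuming bbox = [x1, y1, x2, y2]."""
    x1, y1, x2, y2 = det["bbox"]
    return x2 - x1, y2 - y1
```

Applied to the full response above with score_thr=0.8, count_detections keeps 6 cars and 1 bench.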

You can also use test_torchserver.py to compare the TorchServe and PyTorch results and visualize them.

python tools/deployment/test_torchserver.py ${IMAGE_FILE} ${CONFIG_FILE} ${CHECKPOINT_FILE} ${MODEL_NAME} \
    [--inference-addr ${INFERENCE_ADDR}] [--device ${DEVICE}]

Example:

python tools/deployment/test_torchserver.py demo/demo.jpg configs/yolox/yolox_s_8x8_300e_coco.py checkpoints/yolox_s_8x8_300e_coco.pth yolox_s_8x8_300e_coco

Visualization result:

[Figure: test_torchserver.py visualization]

To stop the running service:

docker container ps                      # list the running containers
docker container stop ${CONTAINER_ID}    # stop the container with that ID

Source: https://www.cnblogs.com/gy77/p/16612922.html
