Tags: guest, Hypervisor, KVM, VM, mode, Qemu, like

instruction set to provide isolation of resources at the hardware level. Since Qemu is a userspace process, the kernel schedules it like any other process.

Before we discuss Qemu and KVM, we touch upon Intel VT-x and the instructions it adds.

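VT-x addresses the problem that the original x86 instruction set architecture cannot be virtualized with classic trap-and-emulate techniques, because some sensitive instructions do not trap.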

- Simplify VMM software by closing virtualization holes by design:
  - Ring compression
  - Non-trapping instructions
  - Excessive trapping
- Eliminate the need for software virtualization (i.e. paravirtualization, binary translation).

VT-x adds one more mode, called non-root mode, in which the virtualized guest runs. The guest doesn’t necessarily have to be an operating system, though: projects like Dune run a process within the VM environment rather than a complete OS. The VMM runs in root mode; this is the mode in which kvm runs.

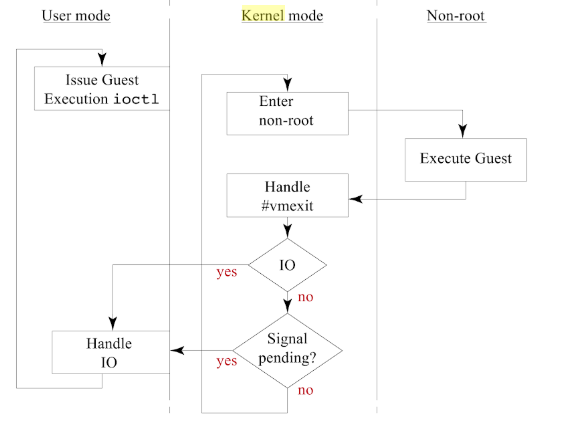

Transitions from non-root mode to root mode happen via a VM exit, and transitions from root mode to non-root mode happen via a VM entry. The registers and address spaces are swapped in a single atomic operation.

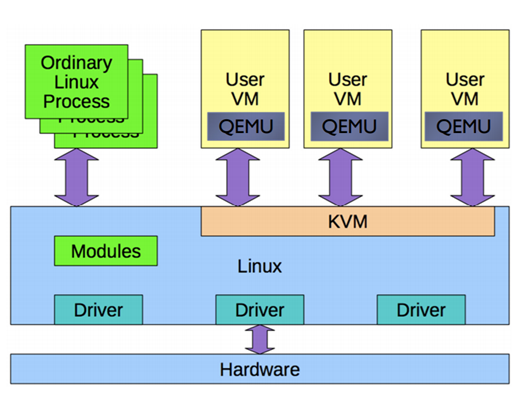

Qemu runs as a user process and drives the kvm kernel module, which uses the VT-x extensions to give the guest an environment that is isolated from a memory and CPU perspective.

This is what it looks like:

The Qemu process owns the guest RAM, which is either a file-backed memory mapping or anonymous memory. Each vcpu provided to the guest runs as a thread on the host kernel. The advantage is that vcpus are scheduled by the Linux scheduler like any other threads. The only difference is the code that executes on those threads. Since it is a whole machine that is virtualized, the guest code consists of a software BIOS followed by the operating system.
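As a rough illustration of the layout described above, the following toy sketch maps anonymous memory as stand-in guest RAM and starts one host thread per vcpu. The names `start_vcpus` and `vcpu_thread` are hypothetical, and the thread body only touches the shared RAM; a real vcpu thread would instead loop around the KVM_RUN ioctl.

```python
import mmap
import threading

GUEST_RAM_SIZE = 4 * mmap.PAGESIZE  # tiny stand-in for guest RAM


def vcpu_thread(vcpu_id, guest_ram, finished):
    """Stand-in for a vcpu thread (a real one would loop on KVM_RUN)."""
    guest_ram[vcpu_id] = vcpu_id + 1  # every vcpu sees the same guest RAM
    finished.append(threading.current_thread().name)


def start_vcpus(count):
    """Map anonymous 'guest RAM' and run one host thread per vcpu."""
    guest_ram = mmap.mmap(-1, GUEST_RAM_SIZE)  # -1 means anonymous, not file-backed
    finished = []
    threads = [
        threading.Thread(
            target=vcpu_thread, args=(i, guest_ram, finished), name=f"vcpu-{i}"
        )
        for i in range(count)
    ]
    for thread in threads:
        thread.start()  # from here on the host scheduler decides when each runs
    for thread in threads:
        thread.join()
    return guest_ram, sorted(finished)
```

Passing a file descriptor instead of `-1` to `mmap.mmap` would give the file-backed variant mentioned in the text.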

Qemu also dedicates a separate thread to I/O. This thread runs an event loop built on non-blocking I/O and registers the file descriptors it needs to watch. Qemu can use paravirtualized drivers like virtio to provide guests with virtio devices, such as virtio-blk for block devices and virtio-net for network devices.
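The event-loop idea can be sketched in a few lines. This is not Qemu's main loop, only a minimal model of the same pattern using Python's standard `selectors` module: file descriptors are registered with a callback, and the loop dispatches whichever ones become readable. The socket pair and the names `run_event_loop`, `on_readable`, and `demo` are assumptions made for the example.

```python
import selectors
import socket


def on_readable(sock):
    """Callback invoked when a registered descriptor has data."""
    return sock.recv(4096)


def run_event_loop(selector, max_iterations=10):
    """Dispatch ready descriptors to their callbacks until the loop goes idle."""
    results = []
    for _ in range(max_iterations):
        events = selector.select(timeout=0.1)
        if not events:
            break  # nothing ready within the timeout: stop the demo loop
        for key, _mask in events:
            callback = key.data
            results.append(callback(key.fileobj))
    return results


def demo():
    """Register one non-blocking descriptor and service a single request."""
    guest_side, host_side = socket.socketpair()
    host_side.setblocking(False)
    selector = selectors.DefaultSelector()
    selector.register(host_side, selectors.EVENT_READ, on_readable)
    guest_side.send(b"block request")
    try:
        return run_event_loop(selector)
    finally:
        selector.close()
        guest_side.close()
        host_side.close()
```

Because the loop only wakes when a descriptor is ready, one thread can service many devices without blocking on any single one.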

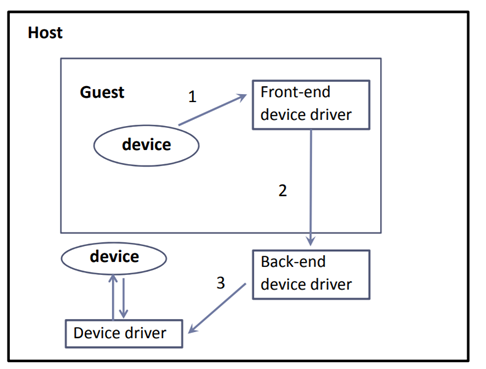

Here you can see that the guest, running within the Qemu process, implements the front-end driver, whereas the host implements the back-end driver. The front-end and back-end drivers communicate over specialized data structures called virtqueues. Any packet that originates from the guest is first put into a virtqueue, and the host side is then notified (a "kick", typically a write to a device register that traps to the host) so that it drains the packet and passes it to the device for actual processing. There are two variations of this packet flow.

- The packet from the guest is received by Qemu and then pushed to the back-end driver on the host. virtio-net is an example.

- The packet from the guest reaches the host directly via what is called a vhost driver. This bypasses the Qemu layer and is relatively faster.
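The producer/consumer relationship between the two drivers can be modelled with a toy queue. This sketch deliberately ignores the real virtio ring layout (descriptor table, available ring, used ring in shared memory) and only shows the flow: the guest adds buffers, kicks once, and the host drains them. `ToyVirtqueue` and `host_backend` are hypothetical names.

```python
from collections import deque


class ToyVirtqueue:
    """Greatly simplified stand-in for a virtqueue shared by guest and host."""

    def __init__(self, size):
        self.size = size
        self.avail = deque()  # buffers the guest front-end has offered
        self.used = deque()   # buffers the host back-end has finished with
        self.kicks = 0

    def guest_add(self, buf):
        """Front-end: queue a buffer without notifying the host yet."""
        if len(self.avail) >= self.size:
            raise BufferError("virtqueue full")
        self.avail.append(buf)

    def guest_kick(self, backend):
        """Front-end: notify the back-end that buffers are waiting."""
        self.kicks += 1
        backend(self)


def host_backend(queue):
    """Back-end: drain every available buffer and report it as used."""
    while queue.avail:
        buf = queue.avail.popleft()
        queue.used.append((buf, len(buf)))
```

Batching several buffers behind a single kick is the point of the design: each notification costs a transition out of the guest, so fewer kicks mean less overhead.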

There is also a hotplug capability that makes devices dynamically available in the guest. This allows, for example, block devices to be resized dynamically. There is also a hotplug-dimm module that allows the RAM available to the guest to be resized.

Finally, a VM is created through a set of ioctl calls to the kernel kvm module, which exposes the device /dev/kvm to userspace. In simplified terms, these are the calls userspace makes to create and launch a VM:

- KVM_CREATE_VM: the new VM has no virtual CPUs and no memory

- KVM_SET_USER_MEMORY_REGION: map userspace memory for the VM

- KVM_CREATE_IRQCHIP / …PIT, KVM_CREATE_VCPU: create the hardware components and map them onto VT-x functionality

- KVM_SET_REGS / …SREGS / …FPU / …, KVM_SET_CPUID / …MSRS / …VCPU_EVENTS / …, KVM_SET_LAPIC: hardware configuration

- KVM_RUN: start the VM

KVM_RUN starts the VM. Internally, the kernel module executes the VMLAUNCH instruction to put the VM's code into non-root mode, with the instruction pointer set to the location of the code in guest memory. This is an oversimplification, as the module does much more to set up the VM, such as preparing the VMCS (Virtual Machine Control Structure).
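The first few calls of this sequence can be sketched from userspace as follows. This is a minimal sketch rather than how Qemu does it: it assumes a Linux host using the common ioctl number encoding (as on x86-64), hard-codes request numbers taken from the kernel's `linux/kvm.h`, and stops before register setup and KVM_RUN. The helper names `_io`, `_iow`, and `create_vm` are hypothetical, and the function returns `None` when /dev/kvm is missing or inaccessible.

```python
import ctypes
import fcntl
import mmap
import os
import struct

KVMIO = 0xAE  # ioctl "type" byte shared by all KVM requests


def _io(nr):
    """Encode an ioctl request that carries no argument struct."""
    return (KVMIO << 8) | nr


def _iow(nr, size):
    """Encode an ioctl request where userspace passes a struct to the kernel."""
    return (1 << 30) | (size << 16) | (KVMIO << 8) | nr


# struct kvm_userspace_memory_region:
#   u32 slot, u32 flags, u64 guest_phys_addr, u64 memory_size, u64 userspace_addr
MEM_REGION_FMT = "IIQQQ"

KVM_GET_API_VERSION = _io(0x00)
KVM_CREATE_VM = _io(0x01)
KVM_CREATE_VCPU = _io(0x41)
KVM_SET_USER_MEMORY_REGION = _iow(0x46, struct.calcsize(MEM_REGION_FMT))


def create_vm(mem_size=mmap.PAGESIZE):
    """Create a VM with one memory slot and one vcpu; None if KVM is unusable."""
    fds = []
    try:
        kvm_fd = os.open("/dev/kvm", os.O_RDWR)
        fds.append(kvm_fd)
        api = fcntl.ioctl(kvm_fd, KVM_GET_API_VERSION, 0)
        vm_fd = fcntl.ioctl(kvm_fd, KVM_CREATE_VM, 0)  # no vcpus, no memory yet
        fds.append(vm_fd)
        ram = mmap.mmap(-1, mem_size)  # anonymous memory owned by this process
        host_addr = ctypes.addressof(ctypes.c_char.from_buffer(ram))
        # Slot 0, no flags, guest physical address 0 backed by our mapping.
        region = struct.pack(MEM_REGION_FMT, 0, 0, 0, mem_size, host_addr)
        fcntl.ioctl(vm_fd, KVM_SET_USER_MEMORY_REGION, region)
        vcpu_fd = fcntl.ioctl(vm_fd, KVM_CREATE_VCPU, 0)  # vcpu id 0
        fds.append(vcpu_fd)
    except OSError:
        for fd in reversed(fds):
            os.close(fd)
        return None  # no /dev/kvm, no permission, or the host refused a call
    return {"api": api, "kvm_fd": kvm_fd, "vm_fd": vm_fd,
            "vcpu_fd": vcpu_fd, "ram": ram}
```

To actually run guest code, a caller would still need to copy instructions into `ram`, configure the vcpu with the KVM_SET_SREGS and KVM_SET_REGS calls, map the shared `kvm_run` area of the vcpu descriptor, and then loop on KVM_RUN while handling each exit reason.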

Disclaimer : The views expressed above are personal and not of the company I work for.

Source: https://www.cnblogs.com/dream397/p/14274297.html
